This section provides some advice on how to plan and design Iotellect deployment architectures. Specifically, it offers hints on how to distribute system functions between individual servers and how to build reliable, high-performance data transfer between them.

Architecture Planning

Note that Iotellect is a complex platform, and highly loaded applications built on top of it won’t be optimal if they rely solely on the generic patterns described here. Every high-load architecture requires careful thought. We encourage Iotellect partners and customers to stay in touch with the Iotellect team to validate complex architectures.

Defining Server Roles

Individual Iotellect server instances running on physical servers, VMs, and containers are the primary building blocks of every Iotellect installation. To simplify architecture design and license cost planning, we suggest dividing servers into several roles. There may be one or multiple servers in each role; for example, a typical design scenario involves multiple servers in the edge server role and a single server in the primary server role.

| Throughout this section, when we refer to an Iotellect server instance, we mean either an individual server or a failover cluster that includes two or more servers. From an architectural point of view, a failover cluster acts as a single server, just one that is resilient to local hardware and software failures. |

Any increase in server count within an Iotellect installation, just like with any other software, makes the system more complex and raises deployment and administration costs. Therefore, it’s always important to find the right balance between monolithic and microservice architectures.

Strictly speaking, there are just a few fundamental reasons for splitting functionality into multiple Iotellect servers:

Geographical distribution of devices, data sources and server hosting points (i.e. datacenters and control rooms/cabinets)

Resource limits of each individual server, mostly CPU core count and RAM size, but also local disk space and network bandwidth

Ability of a “microservice” server to continue operations while neighbouring microservices are down, being restarted or being switched to failover

Let’s discuss those reasons separately.

Geo-based Role Distribution

If your devices are located far away from the primary datacenter, it’s often worthwhile to install dedicated Iotellect servers close to the devices. These can be “pure” edge servers running on industrial PCs or edge gateways, or regular physical servers and even VMs located in a server rack or a small control room of a remote facility.

There are several benefits provided by a server geographically close to the devices:

Local device polling and control can be performed in an isolated local network segment via protocols that lack security and cannot withstand protracted network outages, such as most industrial automation protocols

Collected data can be buffered, filtered, encrypted, and compressed before being sent to a higher tier server

In some cases, “raw” device data is actually never sent to another server, but rather processed locally and only valuable changes and events are forwarded “up”
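To illustrate local filtering and buffering at the edge, here is a minimal, generic Python sketch of deadband (report-by-exception) filtering combined with a bounded store-and-forward queue. It is not Iotellect’s implementation or API: `EdgeForwarder`, `deadband`, and the `send` callable are hypothetical names used only for illustration.

```python
from collections import deque
from typing import Callable, Deque, Dict, Tuple

Sample = Tuple[str, float]  # (metric name, value)


class EdgeForwarder:
    """Hypothetical edge-side filter plus store-and-forward buffer (not an Iotellect API)."""

    def __init__(self, send: Callable[[Sample], bool], deadband: float, max_buffer: int = 10_000):
        self.send = send                  # returns True if the upper tier accepted the sample
        self.deadband = deadband          # minimum change worth forwarding "up"
        self.last_forwarded: Dict[str, float] = {}
        # Bounded queue: when full, the oldest buffered samples are dropped.
        self.buffer: Deque[Sample] = deque(maxlen=max_buffer)

    def on_sample(self, metric: str, value: float) -> None:
        last = self.last_forwarded.get(metric)
        # Report by exception: skip values that changed less than the deadband.
        if last is not None and abs(value - last) < self.deadband:
            return
        self.last_forwarded[metric] = value
        self.buffer.append((metric, value))
        self.flush()

    def flush(self) -> None:
        # Forward buffered samples in order; stop at the first failure and retry later.
        while self.buffer:
            if not self.send(self.buffer[0]):
                return
            self.buffer.popleft()


# Usage: simulate an upstream link that is down for the first sample.
sent = []
link_up = False


def send(sample: Sample) -> bool:
    if link_up:
        sent.append(sample)
    return link_up


fw = EdgeForwarder(send, deadband=0.5)
fw.on_sample("pressure", 10.0)  # kept in the buffer: link is down
fw.on_sample("pressure", 10.2)  # filtered out: change is below the deadband
link_up = True
fw.on_sample("pressure", 11.0)  # link is up: both buffered samples are sent in order
print(sent)  # [('pressure', 10.0), ('pressure', 11.0)]
```

Only “valuable changes” leave the edge, and nothing is lost while the uplink is down as long as the buffer does not overflow.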

Here are some examples of geo-distributed server locations:

An isolated, unstaffed remote facility with an industrial edge gateway, such as a pumping station or a standalone electrical transformer

A shop floor, solar farm, or gas station that has field personnel for routine operations but doesn’t have qualified engineers for investigating software incidents and making serious configuration changes

Resource-based Role Distribution

In a high-load system, any “central” server is normally involved in processing millions or even billions of events per day coming from thousands of devices and data sources, connected directly or indirectly.

This means that the sustained load on such a server is normally quite high, but also quite stable, since devices tend to report data samples at fixed intervals.

At the same time, human operators working with your IoT/IIoT product or solution can often create huge load spikes, especially while investigating an incident, when regular product users and even system administrators run various reports and browse huge amounts of historical data.

If a “device-faced” server running at a high sustained load (e.g. high CPU load and memory usage) is hit by such a “human action spike”, it may fail to continue regular monitoring and control operations, missing important new device fleet events and situations.

Therefore, for highly loaded systems we generally recommend splitting “device-faced” and “human-faced” functions into separate Iotellect servers:

Device-faced servers are responsible for direct or indirect (via lower-tier servers) device data collection, storage, and basic processing (filtering, enrichment, alerting, etc.)

Human-faced servers perform operations initiated by users and operators via your product or solution UI, e.g. loading and processing historical datasets, filling printable reports, preparing data for complex dashboards, etc.

There are also some operations that consume a significant amount of resources but are bound to incoming device data flows. Examples include ML-based trainable units, complex event processing, and network topology discovery.

We recommend keeping such operations on device-faced servers, since they don’t normally introduce high and unpredictable load spikes. However, in very highly loaded systems, such operations can be moved to one or more servers with a dedicated “advanced device data processing” role.

Availability-based Role Distribution

In a classic microservice architecture, each microservice is intended to continue operations while neighbouring microservices are down for a short (or sometimes even extended) amount of time.

| In the case of an IoT/IIoT product, each microservice will have to exchange information with neighbouring microservices at a very high rate, often several million interactions per second. Imagine that you have 10,000 devices with 10 metrics each, polled at a 1 Hz rate. There are also 10 simple alerts, each checking all metrics at the same 1 Hz rate to verify that they meet certain complex criteria and to detect malfunctions without delay so they can be indicated to system operators. In this case, the number of checks per second will be 10,000 × 10 × 10 = 1,000,000. If the devices and the alerts module reside on the same Iotellect server, each check is a simple in-memory object reference that comes at almost zero cost. However, if devices and alerts are separated into different Iotellect servers, each check will incur a local network query that implies data serialization/deserialization, encryption/decryption, transfer via the operating system’s network stack, and even a non-zero network delay. Regardless of the protocol used for server-to-server communications (e.g. the Iotellect protocol or HTTP/REST), and even if the platform manages to “aggregate” 1 million checks into several thousand separate network operations, the additional system load caused by this rather simple alert checking will drain a significant amount of resources on both “separated” servers. |
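The arithmetic in the note above can be reproduced with a tiny Python helper. The batch size of 250 checks per network operation is purely an assumption, picked to land in the “several thousand operations” range; it does not describe how the platform actually aggregates requests.

```python
def checks_per_second(devices: int, metrics_per_device: int, alerts: int, rate_hz: float) -> float:
    # Every alert checks every metric of every device once per polling cycle.
    return devices * metrics_per_device * alerts * rate_hz


def remote_calls_per_second(checks: float, checks_per_batch: int) -> float:
    # Hypothetical batching: checks grouped into network operations of a fixed size.
    return checks / checks_per_batch


checks = checks_per_second(10_000, 10, 10, 1.0)
print(checks)                                # 1000000.0
print(remote_calls_per_second(checks, 250))  # 4000.0
```

Even with batching, each of those thousands of remote operations still pays for serialization, encryption, and the network stack on both ends, whereas the same-server case pays essentially nothing.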

However, in contrast to regular microservices, Iotellect provides active-passive and active-active failover clustering, ensuring high availability of each individual server and minimizing its downtime.

Therefore, we generally do not recommend splitting functionality between Iotellect servers solely for the sake of higher availability.

Instead, each Iotellect server whose downtime would affect the operation of the whole system should be deployed as an active-passive or active-active failover cluster.

Active-passive failover doesn’t really require any architectural planning. Just install a second failover server instance next to the master server instance, and your downtime will generally be limited to tens of seconds (or a few minutes at most, in very rare cases).

Active-active failover requires careful prior design of your application’s architecture and the installation of one or more failover cluster coordinator servers. In return, it limits downtime to a few seconds, which is generally acceptable for any IoT/IIoT product or solution type.

Configuring Server-to-Server Connections

With Iotellect, you have many options for transferring data and executing control operations from one server to another. This section helps you choose the right server connection method.

Basically, all server-to-server connection methods are divided into three groups:

The first server acts as a data source by running a special Local Agent device, while the second server acquires data from the first one via the Agent device driver

The first server acts as a provider server and the second one as a consumer server in the Iotellect distributed architecture

Two Iotellect servers exchange information via a third-party technology that Iotellect supports on both the “server/sender” and “client/consumer” sides. Such technologies include MQTT, HTTP, Kafka, OPC, IEC-104, and other protocols

The Iotellect platform is designed so that all server-to-server connection cases can be covered by the first two scenarios (Local Agent / Agent pair and distributed architecture). Both rely on the Iotellect Protocol to establish secure, compressed, and highly efficient server connections; the Local Agent / Agent pair additionally provides buffering and guaranteed delivery. Connections that use third-party protocols are available at the partner’s or customer’s discretion but won’t normally provide any additional benefits.

The table below helps you choose a proper server-to-server communication method:

| | Local Agent - Agent | Distributed Architecture |
|---|---|---|
| Primary Purpose | Making one server act as a data source for another server. Forwarding device data from a lower-tier server to a higher-tier server for persistent storage. | Configuration and control of lower-tier servers from a higher-tier server. Ability of higher-tier servers to request raw and aggregated historical device datasets from storage facilities of lower-tier servers. |
| Implementation | A server that uses a Local Agent exposes entities of selected local contexts as entities of an Agent device on a remote server, essentially becoming an Iotellect Agent for a higher-tier server. | A consumer server attaches a selected context sub-tree of a remote provider server to its local context tree. Local and remote contexts within the consumer server’s unified data model may be used uniformly. |
| Device Data Residence | Usually higher-tier server | Usually lower-tier server |
| Same-tier Server Connections | Normally not relevant | Normally used for interconnecting same-tier servers |
| Network and Transport Protocol | TCP/IP | TCP/IP |
| Data Encryption | SSL/TLS | SSL/TLS |
| Data Compression | Yes, optional | Yes, optional |
| Application Protocol | Iotellect Protocol | Iotellect Protocol |
| Guaranteed Delivery | Yes | No |
| Data Buffering/Queuing | Yes | No |
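The table can be condensed into a rough decision helper. This is a hypothetical Python sketch: `LinkRequirements` and `choose_methods` are illustrative names, and the rules simply restate the table rows (primary purpose, same-tier connections, guaranteed delivery); they are not an Iotellect API.

```python
from dataclasses import dataclass


@dataclass
class LinkRequirements:
    forward_device_data_up: bool     # persist device data on the higher-tier server
    remote_config_and_control: bool  # configure/control lower-tier servers centrally
    query_remote_history: bool       # read history kept on lower-tier servers
    same_tier_link: bool             # interconnect servers of the same tier
    needs_guaranteed_delivery: bool  # survive link outages without data loss


def choose_methods(req: LinkRequirements) -> set[str]:
    methods = set()
    if req.forward_device_data_up or req.needs_guaranteed_delivery:
        # Per the table, only the Local Agent - Agent pair buffers data and guarantees delivery.
        methods.add("Local Agent - Agent")
    if req.remote_config_and_control or req.query_remote_history or req.same_tier_link:
        # Configuration, control, remote history access, and same-tier links.
        methods.add("Distributed Architecture")
    return methods


# A collector that forwards data up and is also managed centrally needs both methods.
collector = LinkRequirements(True, True, False, False, True)
print(sorted(choose_methods(collector)))  # ['Distributed Architecture', 'Local Agent - Agent']
```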

Note that in many cases both connection methods can be used in parallel. In this case, the Local Agent - Agent connection transfers device data between Iotellect servers, while the distributed architecture connection handles configuration and control operations.

Estimating Server Requirements

By this point, you should know the number and roles of the Iotellect server instances in your system. It’s now time to estimate system requirements for every server/VM/container, primarily the number of CPU cores, RAM size, and disk storage space.

The Requirements section in Iotellect Server documentation provides detailed guidelines on server parameters assessment.

Unfortunately, due to the complexity of solutions built on top of Iotellect, there’s no straightforward way to calculate required resources precisely. This is similar to code-first development with classic technology stacks (such as Java + JavaScript), where required resources are a very complex function of code quality and system load. Likewise, the performance of your servers may depend significantly on the actual low-code logic of your app. You might need to implement some performance optimizations, such as caching intermediate retrieved/calculated values, to ensure high performance of your solution.

Here are some more hints that may be useful for resource estimation:

The number of required CPU cores correlates most strongly with the number of events handled by the Iotellect Server core. When estimating the number of those events, remember that a change of a variable value is also an event. Thus, take into account events and variable value changes generated by local device accounts, events and value changes forwarded from other servers, and events and variable value changes generated locally (e.g. by models, correlators, etc.).
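A minimal Python sketch of that event tally follows. All input numbers are made-up examples, and the `change_ratio` parameter is an assumption (the share of polls that actually change a value; use 1.0 to count every poll). The sketch deliberately stops at an events-per-second figure, since no events-to-cores ratio is given here; use the Requirements section for that step.

```python
def polled_changes_per_second(
    devices: int, variables_per_device: int, poll_period_s: float, change_ratio: float = 1.0
) -> float:
    # Variable value changes produced by polling local device accounts.
    return devices * variables_per_device / poll_period_s * change_ratio


def core_events_per_second(
    device_events: float,       # events raised by local device accounts
    device_var_changes: float,  # variable value changes from local devices
    forwarded: float,           # events and value changes forwarded from other servers
    local: float,               # events/changes generated by models, correlators, etc.
) -> float:
    return device_events + device_var_changes + forwarded + local


# Example: 5,000 devices x 20 variables polled every 10 s, half of the polls change a value.
changes = polled_changes_per_second(5_000, 20, 10.0, change_ratio=0.5)
total = core_events_per_second(device_events=200, device_var_changes=changes, forwarded=3_000, local=800)
print(changes, total)  # 5000.0 9000.0
```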

The amount of required RAM correlates most strongly with the number of contexts on your server. Each context, be it a device context, a model context, or a user context, is a complex object with internal caches, queues, and other memory-sensitive elements. Therefore, servers with 10 and 100,000 devices will require different amounts of RAM even if the total number of events per second generated by those device fleets is roughly equal.

The amount of disk space required by Iotellect Server itself is not large. In short, any modern SSD with several hundred gigabytes will be enough to host the server itself. However, the Iotellect storage facility requires an amount of storage space that correlates strongly with the number of historical events and value changes configured for persistent storage, as well as with the storage (expiration) period and the size of every data sample. Thus, for a rough estimate of required storage space, multiply the number of persistent events per day by the storage period (in days) and by the average disk footprint of a sample. This footprint is a few hundred bytes for simple events/changes with scalar values, but can grow to multiple kilobytes if data samples carry tabular or hierarchical payloads, such as JSON documents. Remember that disk space requirements apply to the server that runs the selected database engine, which is not necessarily the Iotellect server machine.
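The rough storage formula above, sketched in Python. The fleet size, one-minute sampling interval, 90-day retention, and 300-byte footprint are example assumptions, not recommendations; real footprints depend on the database engine and the payload type.

```python
GIB = 1024 ** 3


def storage_bytes(events_per_day: float, retention_days: float, avg_sample_bytes: float) -> float:
    # Daily persistent events x storage period (days) x average disk footprint per sample.
    return events_per_day * retention_days * avg_sample_bytes


# Example: 2,000 devices x 10 persisted variables, one sample per minute each.
events_per_day = 2_000 * 10 * 24 * 60  # 28,800,000 samples per day
size = storage_bytes(events_per_day, retention_days=90, avg_sample_bytes=300)
print(round(size / GIB, 1))  # 724.2
```

If samples carry JSON or tabular payloads of several kilobytes, the same fleet can easily need ten times more space, and database-level replication (as in the failover schemes below) multiplies it again.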

If the project budget makes it hard to request additional resources at later stages of system implementation, it’s generally a good idea to increase the resource estimates obtained from the Requirements section recommendations. The more complicated the system design, the higher the percentage of additional resources that should be requested to mitigate the risk of performance bottlenecks.

Typical Server Roles

Although the architecture of every Iotellect-based product and solution is unique, there are typical design and implementation patterns shared by multiple solutions. In particular, there are some typical Iotellect Server roles that imply certain functions within the whole system and rely on certain platform features to make them work.

Here are the most commonly used server roles:

All-in-one server. This is a single Iotellect server that hosts the whole IoT solution or product. It covers all aspects of system operation, from device communications to hosting user connections via the embedded web server. In most cases, an all-in-one server stores data in an embedded database. In other cases, the database server runs on a dedicated machine.

Collector server. This is a typical role for an Iotellect server running on an IoT edge gateway or on a regular machine located right next to the devices. A collector communicates with devices via various drivers but doesn’t normally store the data locally. Instead, data is routed to another server (typically installed in a datacenter) via the Local Agent driver, which uses buffering to handle communication failures. A collector server may be controlled and configured either centrally via the distributed architecture or locally via the embedded web server. A collector server is sometimes called a Probe server, since it can perform monitoring operations in its local network segment and upload measurement data to the cloud.

Storage server. A storage server is a hub that receives data from collector/probe servers, pre-aggregates it, and stores it in a high-performance database. Since storage servers are often used in high-load installations, a storage server is likely to use an external database cluster rather than an embedded database instance.

Analytics server. Such a server is normally responsible for data analysis via machine learning, complex event processing, the Iotellect query language, and other advanced analytics modules. It can perform on-the-fly analysis of device data streams picked up either directly from collectors/probes or as redirected by a storage server. An analytics server can hold large datasets in memory for analysis or periodically load them from a storage facility. Processed data (results) can either be kept in memory for fast presentation in operator UIs or saved back to the database.

Web server. A server in this role primarily hosts the embedded web server engine. It can act as a standalone web server, responsible only for serving web-based operator connections and redirecting all operations to other servers. In other cases, it can be a more “regular” Iotellect server that combines hosting web user sessions with other activities.

Horizontal cluster’s primary node, coordinator node, application server, and login server. These server roles are specific to the Horizontal Cluster deployment architecture. The purpose of a horizontal cluster is to provide unlimited automatic horizontal scalability via a serverless architecture. A greatly simplified version of a horizontal cluster (with only two primary nodes and one or two coordinator nodes) allows implementing an active-active failover scenario. See the Horizontal Cluster section and the Configuring Servers for Active-Active Failover tutorial for more information about these server roles.

| Note that each Iotellect server in a distributed installation may have two independent roles: its functional role (one of those described above, or a custom one) and its failover role (e.g. master or slave in an active-passive failover scenario). For example, a failover master collector server acts as a data collection hub, and if it goes down for any reason, its functionality is taken over by the failover slave collector server. |

Typical Deployment Architectures

This section describes the most typical Iotellect deployment architectures. Most products and solutions based on the platform use one of the architectures below, either unchanged or slightly modified. Only a relatively small percentage of large-scale and highly loaded products/solutions require designing and implementing unique architectural schemes.

Monolithic

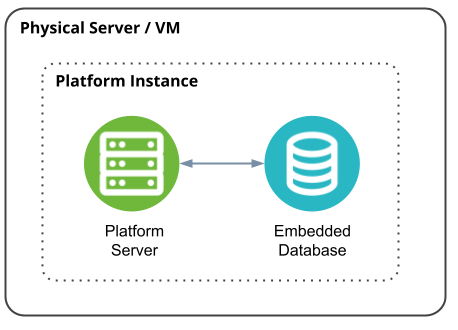

This is the simplest possible Iotellect deployment architecture, and the only one that requires just a single physical server or virtual machine. However, thanks to the high efficiency of the platform, it allows implementing quite complex products/solutions with reasonably high load.

The deployment scheme comprises a server or VM that runs both the Iotellect server and the database engine. The latter normally runs in embedded mode, requiring no dedicated deployment and administration effort.

Since no failover method is implemented here, such an installation requires proper backup and restoration policies.

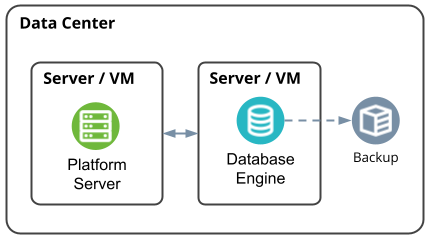

Platform Server + Storage Server

This deployment scheme requires two servers/VMs: one for the Iotellect server instance and another for the database engine instance. Both machines should be located in the same datacenter and connected by a high-speed network (1 Gbps or higher) due to the intensive data exchange between the Iotellect server and its underlying database.

In this scenario, disk space requirements apply to the storage server. The Iotellect server machine should use high-speed SSD-backed storage to ensure faster startup.

Although two servers are used, no failover method is implemented in this deployment scheme, so it requires proper backup and restoration policies.

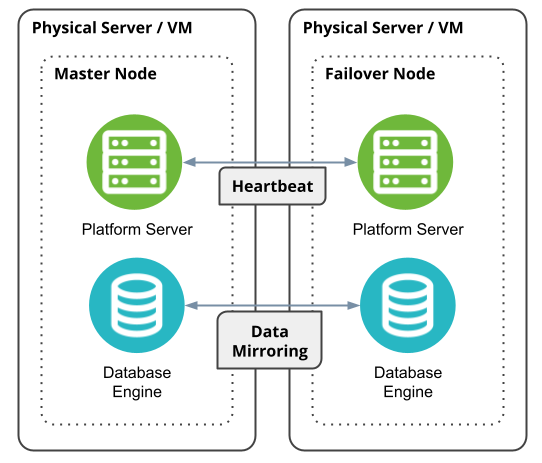

Active-Passive Failover Cluster

This scheme uses two cross-functional physical servers or VMs, each hosting both an Iotellect server instance and a database engine instance.

The Iotellect server instances running on both machines are members of an active-passive failover cluster: one is the master node and the other is the failover node.

The two database engine instances residing on the same machines are nodes of a database-specific failover cluster. For example, if Apache Cassandra is used, the Cassandra servers replicate (mirror) all data between them, ensuring protection against storage failures. In this case, disk space requirements apply to each of the server machines (and are effectively doubled).

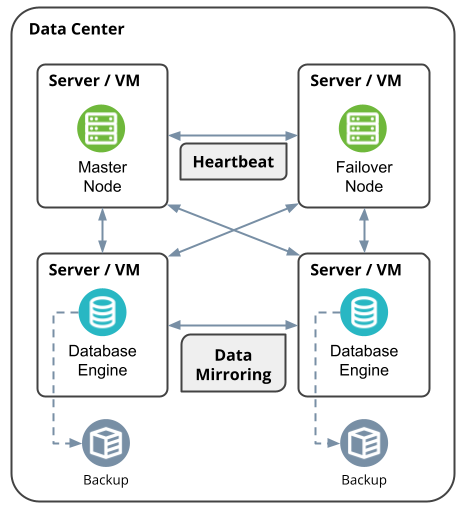

High-load Active-Passive Failover Cluster

This scenario requires four servers/VMs. It’s a highly available Iotellect installation capable of coping with the highest load that can be handled without splitting server roles within the same datacenter.

The only difference from the previous scenario is that each Iotellect and database server instance occupies a dedicated machine. Since Iotellect and its storage engine no longer share CPU/RAM resources, overall performance is higher, especially during load spikes that occur at system startup, during mass device reconnection after a network failure, or during simultaneous resource-intensive activities of multiple users/operators.

Active-Active Failover Cluster

Active-active failover is an advanced technology that relies on the Iotellect horizontal cluster. While it shortens failure downtime from tens of seconds to mere seconds, it requires careful (and sometimes challenging) planning and adaptation of your product/solution architecture to the rather complex horizontal clustering paradigm.

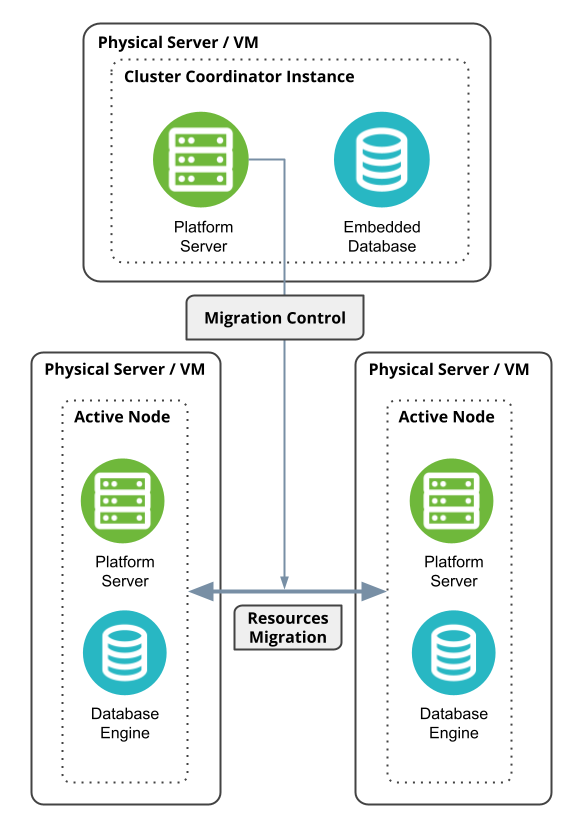

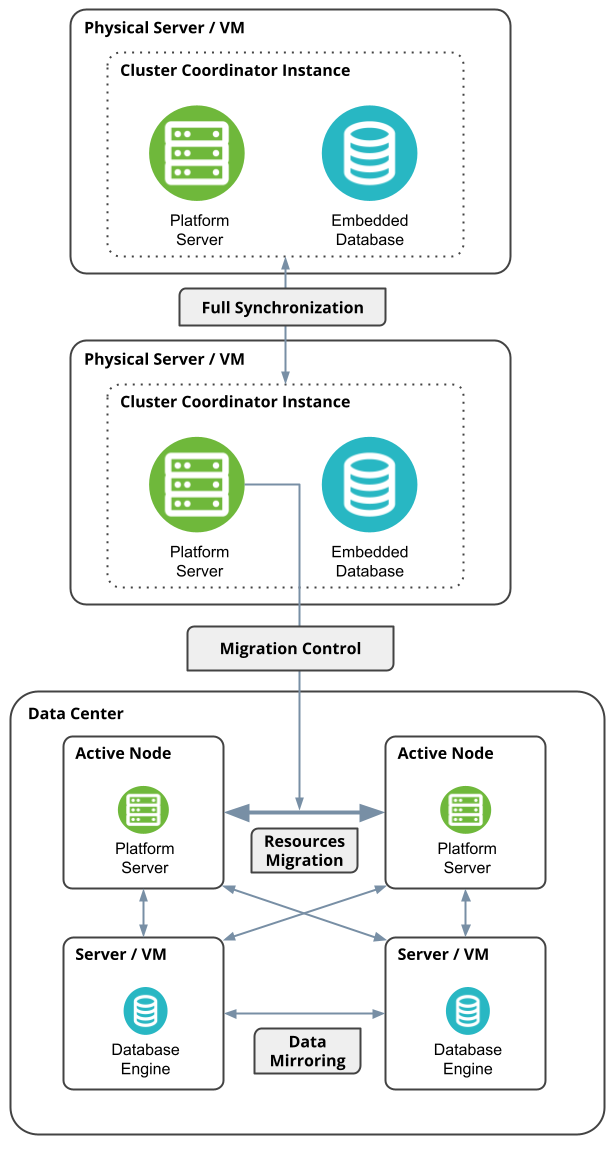

Active-active failover cluster architecture is derived from either two-node Active-Passive Failover Cluster or four-node High-load Active-Passive Failover Cluster, depending on expected system load. One or two Cluster Coordinator servers/VMs are added to the node structures described above, forming the following list of options:

3-node: two shared Iotellect+database servers and a non-replicated cluster coordinator server

4-node: two shared Iotellect+database machines and two replicated cluster coordinator servers

5-node: two Iotellect servers, two database servers and a non-replicated cluster coordinator server

6-node: two Iotellect servers, two database servers and two replicated cluster coordinator servers
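The four options follow a simple counting rule: two Iotellect nodes, plus two database nodes when the database runs on dedicated machines, plus one or two cluster coordinators. A minimal Python sketch of that rule (the function name is illustrative only):

```python
def active_active_nodes(dedicated_db_servers: bool, replicated_coordinator: bool) -> int:
    iotellect_nodes = 2                          # two Iotellect nodes in every option
    db_nodes = 2 if dedicated_db_servers else 0  # shared machines host the database themselves
    coordinators = 2 if replicated_coordinator else 1
    return iotellect_nodes + db_nodes + coordinators


for dedicated in (False, True):
    for replicated in (False, True):
        print(dedicated, replicated, active_active_nodes(dedicated, replicated))
# False False 3
# False True 4
# True False 5
# True True 6
```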

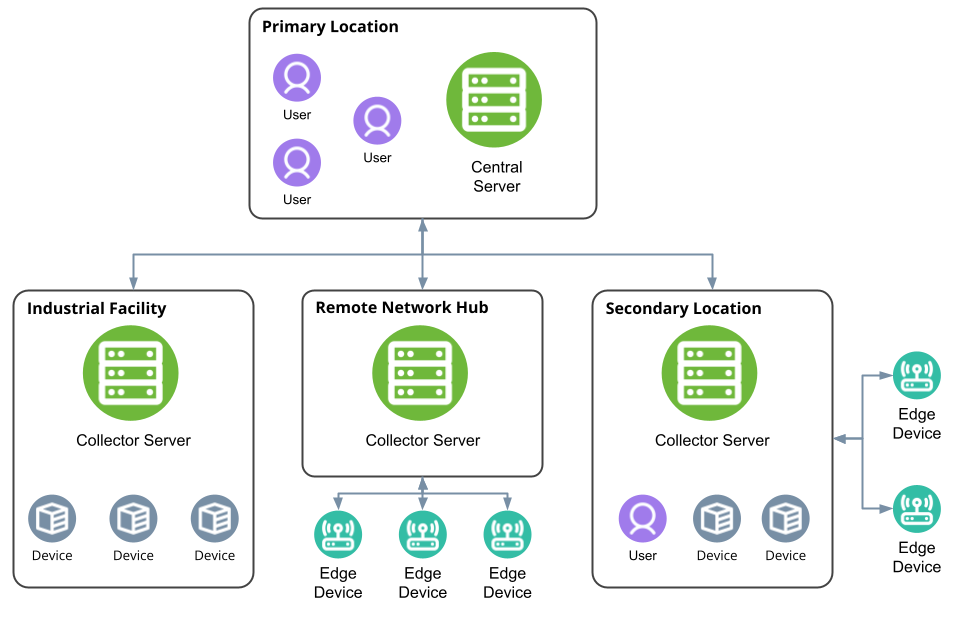

Distributed Two-Tier

This is a geographically distributed deployment scenario with two server roles: one or more collector/probe servers and one central server.

| It’s very important to understand the meaning of a server here and in other distributed deployment scenarios. A server with a specific role is a logical element of the deployment scheme that may be represented by multiple physical servers or VMs deployed in one of the scenarios described above: from Monolithic to Active-Active Failover Cluster. Therefore, a server of a multi-role distributed installation may comprise from one to six physical or virtual server machines. |

In this two-tier scenario, the central server is normally installed in the company’s primary datacenter, while collector/probe servers are located near the devices they monitor or service.

Collector/probe servers are normally Monolithic-type Iotellect installations running on edge gateways, industrial PCs, or regular physical servers installed in a server rack within a remote facility.

However, in some cases collector/probe servers are also highly loaded servers installed in the company’s secondary datacenters that service certain parts of a large industrial/IT infrastructure. In this case, collector/probe servers can use the “Platform Server + Storage Server”, “Active-Passive Failover Cluster”, “High-load Active-Passive Failover Cluster”, or “Active-Active Failover Cluster” schemes.

The central server of such a two-tier installation normally experiences high load and requires high availability. At the same time, this server isn’t involved in near-real-time monitoring, industrial supervisory control, or other time-sensitive operations. Therefore, deploying it as an Active-Active Failover Cluster is usually not worthwhile.

As a result, the central server is normally using “Active-Passive Failover Cluster” or “High-load Active-Passive Failover Cluster” deployment architecture.
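Because each logical server maps onto one of the deployment schemes described earlier, counting the machines of a two-tier installation is a simple sum. A hypothetical Python sketch; the number and type of sites are made-up example values, and Active-Active variants are left out for brevity.

```python
# Machines per logical server for the schemes described above
# (Active-Active is omitted; it takes 3 to 6 machines depending on the variant).
MACHINES_PER_SCHEME = {
    "Monolithic": 1,
    "Platform Server + Storage Server": 2,
    "Active-Passive Failover Cluster": 2,
    "High-load Active-Passive Failover Cluster": 4,
}


def two_tier_machines(collectors: list[str], central: str) -> int:
    # Sum the machines of every collector/probe server plus the central server.
    return sum(MACHINES_PER_SCHEME[s] for s in collectors) + MACHINES_PER_SCHEME[central]


# Example: 12 monolithic edge collectors, 2 collectors in secondary datacenters,
# and a central server deployed as a High-load Active-Passive Failover Cluster.
collectors = ["Monolithic"] * 12 + ["Active-Passive Failover Cluster"] * 2
print(two_tier_machines(collectors, "High-load Active-Passive Failover Cluster"))  # 20
```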

High-Load

The obvious bottleneck of the Distributed Two-Tier deployment scenario is its central server. In a large system with several hundred thousand devices or more, the central server may need to handle up to a million or even several million events per second.

In systems of such scale, the central server needs to be split into several roles, either some of those described above (storage server, analytics server, web server) or custom ones.

| Note that each server in this scenario is also a logical element of the system that may be backed by one or several physical or virtual machines deployed in a certain variation, from Monolithic to High-load Active-Passive Failover Cluster. Active-Active Failover is not an appropriate architecture for the central servers, since they are not involved in near-real-time operations. It may still be implemented but will, in most cases, be serious over-engineering. |

Horizontal Cluster

Horizontal cluster is the most advanced Iotellect deployment scenario. It’s a serverless architecture that allows an Iotellect installation to auto-scale to an unlimited number of devices and users.

Due to its complexity, a horizontal cluster architecture should be planned in cooperation with the Iotellect team.

Horizontal clustering technology is described in a separate section.
